America Rewritten

LATEST

Special Live Panel Discussion on Defending the Constitution: Why It Matters Now More Than Ever

The Constitution details our rights as Americans and safeguards us against tyranny.


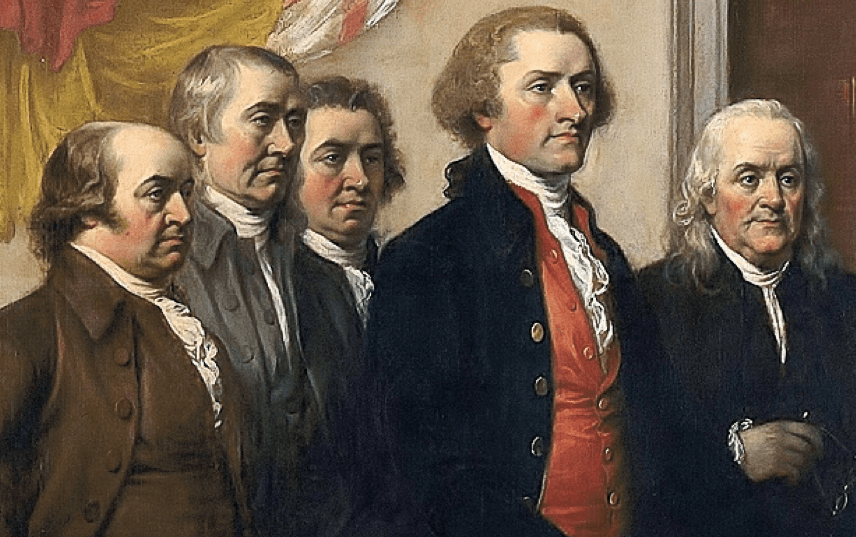

America Rewritten: What the World Would Lose if the US Constitution Was Erased | Documentary

The Constitution of the United States established the American form of government and radically changed ideas about where citizens' rights originate.
