Four federal agencies said on April 25 that they have the power to prevent unlawful “bias in algorithms and technologies” marketed as artificial intelligence (AI).

The agencies pointed to AI use by both private and public entities to make “critical decisions that impact individuals’ rights and opportunities, including fair and equal access to a job, housing, credit opportunities, and other goods and services.”

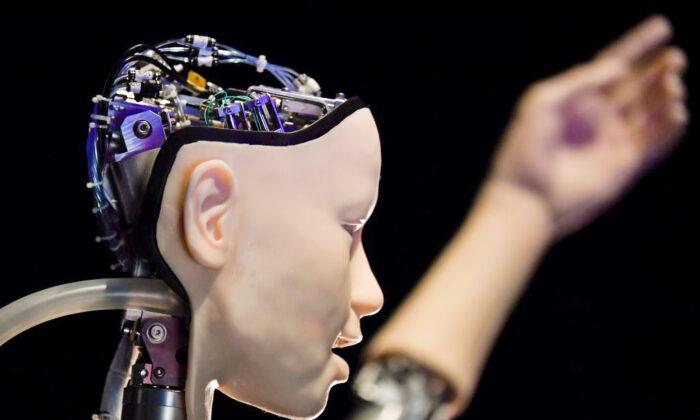

However, they stressed that while such tools provide technological advancement, “their use also has the potential to perpetuate unlawful bias, automate unlawful discrimination, and produce other harmful outcomes.”

The four agencies then pointed to steps they have already taken to protect American consumers from some of the negative aspects of increasingly advanced AI, such as abusive use of the technology, repeat offenders’ use of AI, algorithmic marketing and advertising, and “black box” algorithms, whose internal workings are not clear to most people, including, in some cases, the developers themselves.

They also warned that companies already using AI technology must do so in compliance with the laws and regulations currently in place.

AI Tools Can ‘Turbocharge’ Fraud, Discrimination

“We already see how AI tools can turbocharge fraud and automate discrimination, and we won’t hesitate to use the full scope of our legal authorities to protect Americans from these threats,” said FTC Chair Lina M. Khan. “Technological advances can deliver critical innovation, but claims of innovation must not be cover for lawbreaking. There is no AI exemption to the laws on the books, and the FTC will vigorously enforce the law to combat unfair or deceptive practices or unfair methods of competition.”

The agencies said they are also looking into other ways to prioritize “digital redlining” (in which technology is used to further marginalize or discriminate against specific groups), including bias in algorithms and technologies marketed as AI.

As part of that effort, the CFPB is working with federal partners to protect homebuyers and homeowners from “algorithmic bias” in home valuations and appraisals, the agency said.

“As social media platforms, banks, landlords, employers, and other businesses choose to rely on artificial intelligence, algorithms, and other data tools to automate decision-making and to conduct business, we stand ready to hold accountable those entities that fail to address the discriminatory outcomes that too often result,” said Assistant Attorney General Kristen Clarke of the Justice Department’s Civil Rights Division.

“This is an all-hands-on-deck moment and the Justice Department will continue to work with our government partners to investigate, challenge, and combat discrimination based on automated systems,” Clarke added.

“A handful of powerful firms today control the necessary raw materials, not only the vast stores of data but also the cloud services and computing power, that startups and other businesses rely on to develop and deploy AI products,” Khan said. “And this control could create the opportunity for firms to engage in unfair methods of competition.”

DHS Forming AI Task Force

The joint statement comes as the Biden administration and lawmakers are considering new AI regulations amid an explosion in the use of such technology, most notably OpenAI’s ChatGPT, which can generate human-like conversations and texts.

The task force will also explore implementing AI systems to help protect against China’s malign economic influence and advance safety, security, and economic prosperity in the Arctic and Indo-Pacific regions, according to DHS Secretary Alejandro Mayorkas.

“The profound evolution in the homeland security threat environment, changing at a pace faster than ever before, has required our Department of Homeland Security to evolve along with it,” Mayorkas said in a statement on April 21.

“We must never allow ourselves to be susceptible to ‘failures of imagination’ ... We must instead look to the future and imagine the otherwise unimaginable, to ensure that whatever threats we face, our Department – our country – will be positioned to meet the moment,” he added.