Computers can recognize objects in photos and other images, but how well can they “understand” the relationships or implied activities between objects?

Researchers have figured out a method of evaluating how well computers perform at that task.

The team describes its new system as a “visual Turing test,” after the legendary computer scientist Alan Turing’s test of the extent to which computers display human-like intelligence.

“There have been some impressive advances in computer vision in recent years,” said Stuart Geman, professor of applied mathematics at Brown University. “We felt that it might be time to raise the bar in terms of how these systems are evaluated and benchmarked.”

Traditional computer vision benchmarks tend to measure an algorithm’s performance in detecting objects within an image (the image has a tree, or a car, or a person), or how well a system identifies an image’s global attributes (scene is outdoors or in the nighttime).

“We think it’s time to think about how to do something deeper—something more at the level of human understanding of an image,” Geman said.

Yes or No Questions

For example, it’s one thing to be able to recognize that an image contains two people. But to be able to recognize that the image depicts two people walking together and having a conversation is a much deeper understanding.

Similarly, describing an image as depicting a person entering a building is a richer understanding than saying it contains a person and a building. The system Geman and his colleagues developed, described this week in the Proceedings of the National Academy of Sciences, is designed to test for such a contextual understanding of photos.

It works by generating a string of yes or no questions about an image, which are posed sequentially to the system being tested. Each question is progressively more in-depth and based on the responses to the questions that have come before.

For example, an initial question might ask a computer if there’s a person in a given region of a photo. If the computer says yes, then the test might ask if there’s anything else in that region—perhaps another person. If there are two people, the test might ask: “Are person1 and person2 talking?”

As a group, the questions are geared toward gauging the computer’s understanding of the contextual “storyline” of the photo. “You can build this notion of a storyline about an image by the order in which the questions are explored,” Geman said.

Ambiguous Images

Because the questions are computer-generated, the system is more objective than having a human simply query a computer about an image. There is a role for a human operator, however. The human’s role is to tell the test system when a question is unanswerable because of the ambiguities of the photo.

For instance, asking the computer if a person in a photo is carrying something is unanswerable if most of the person’s body is hidden by another object. The human operator would flag that question as ambiguous.

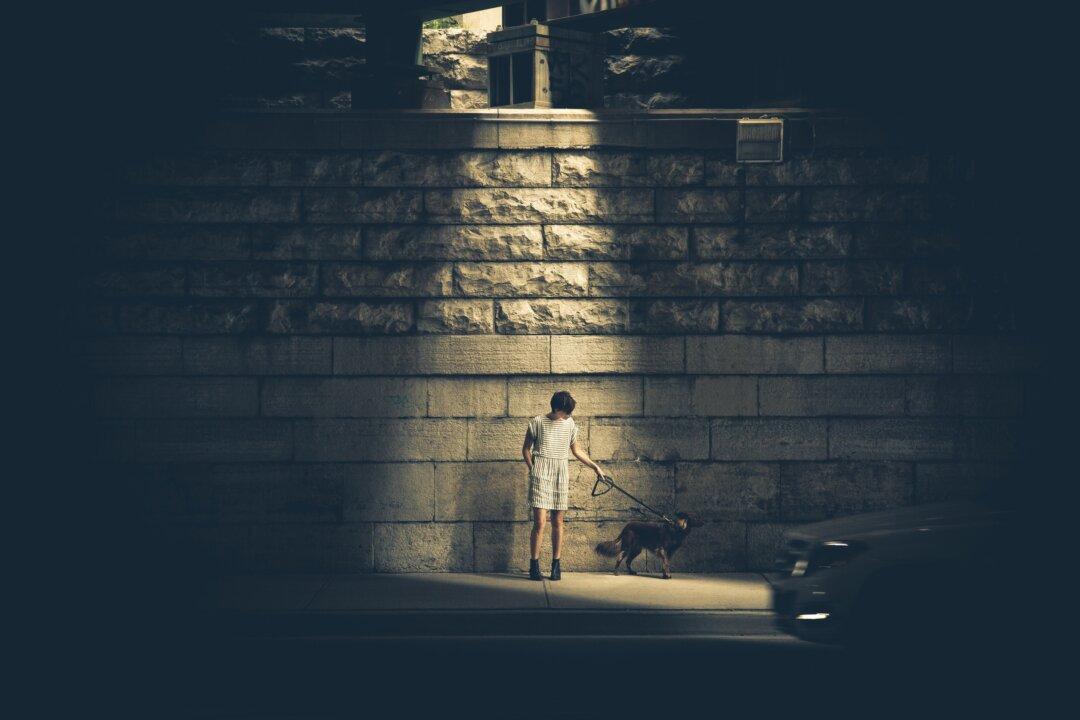

The first version of the test was generated based on a set of photos depicting urban street scenes. But the concept could conceivably be expanded to all kinds of photos, the researchers say.

‘Teaching to the Test’

Geman and his colleagues hope that this new test might spur computer vision researchers to explore new ways of teaching computers how to look at images. Most current computer vision algorithms are taught how to look at images using training sets in which objects are annotated by humans.

By looking at millions of annotated images, the algorithms eventually learn how to identify objects. But it would be very difficult to develop a training set with all the possible contextual attributes of a photo annotated. So true context understanding may require a new machine learning technique.

“As researchers, we tend to ’teach to the test,'” Geman said. “If there are certain contests that everybody’s entering and those are the measures of success, then that’s what we focus on. So it might be wise to change the test, to put it just out of reach of current vision systems.”

Researchers from Johns Hopkins University also contributed to the study.

Kevin Stacey is physical sciences news officer at Brown University. This article was previously posted on Futurity.org under CC by 4.0.