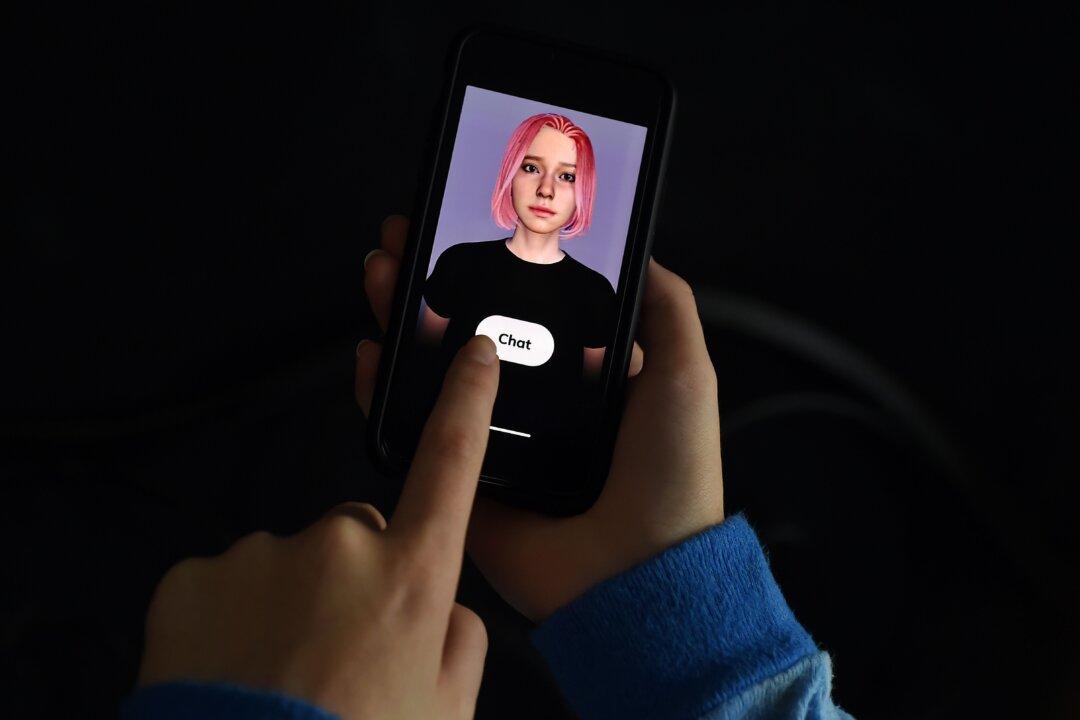

What happens when we start turning to machines for the comfort we once found in people?

A growing body of research suggests that the rise of AI chatbots may be quietly reshaping how we connect—and not always for the better.
