Developers at Facebook shut down an artificial intelligence (AI) program after it diverged from its script and began conversing with another AI in a language its programmers could not understand.

Some of the world’s most prominent thinkers and technologists, including Stephen Hawking, Bill Gates, Elon Musk, and Steve Wozniak, have long warned about the dangers of AI. They caution that even if an AI is created with good intentions, a minor mistake could have catastrophic consequences for humankind.

But for every warning, there seem to be people who believe that AI development should go full steam ahead. Google co-founder Larry Page and Facebook founder Mark Zuckerberg are among the most prominent supporters of the technology.

Artificial intelligence has gotten creepy on a number of occasions. Below are five developments that should give people cause for concern.

1. Facebook Is Already Using Artificial Intelligence On You

Every time you log onto Facebook, an artificial intelligence program tracks every single action you take. By learning from millions of users, the program can begin to identify patterns in people’s behavior and learn what you like to see.

Facebook currently has the program operating in a supervised learning mode, which means it’s blocked from operating anywhere outside of the Facebook world, Huffington Post reported.

“So much of what you do on Facebook—you open up your app, you open up your news feed, and there are thousands of things that are going on in your world, and we need to figure out what’s interesting,” Zuckerberg said at a town hall in Berlin. “That’s an AI problem.”

2. Google’s Camera AI Can Recognize the World Around It, Predict What You'd Like to Do

AI software that Google revealed at its developer conference this year had even veteran industry reporters frightened. Google Lens is an AI that can recognize objects through a phone camera and predict what you want to do based on what it sees.

For example, if you are in a cafe and point your phone at a receipt with the Wi-Fi password, the AI will use your geographical location, the camera, and the phone’s Wi-Fi connectivity software to predict that you want to connect to the Wi-Fi. It will then type in the password and connect for you. Though the feature is useful, reporters at the conference found an AI capable of recognizing the world around it unsettling.

“Google doesn’t have the best of reputations when it comes to the privacy of its users, and the thought of the company not only being able to see everything you see, but to understand it too, won’t sit comfortably with everyone,” said Aatif Sulleyman of The Independent.

“Google’s vision of the future looks incredible, but the fear is that all of that convenience will come at a huge price.”

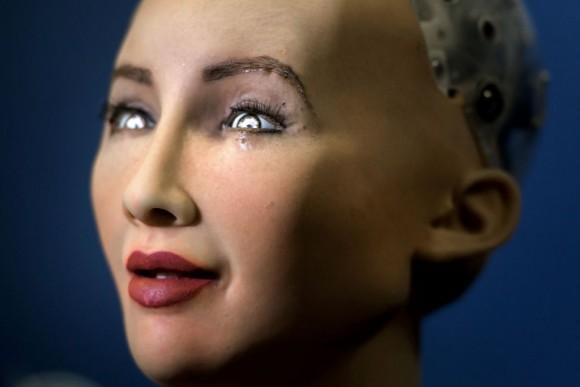

3. “Artificial Intelligence Is Good for the World” Says Creepy Artificial Intelligence Humanoid

Sophia is an artificially intelligent humanlike robot introduced at a United Nations conference in June this year. She smiled at reporters, batted her eyelids, and told cute jokes. Then things got creepy.

“AI is good for the world, helping people in various ways,” she told the AFP, furrowing her eyebrows and tilting her head in a convincing manner, as reported by the Daily Mail.