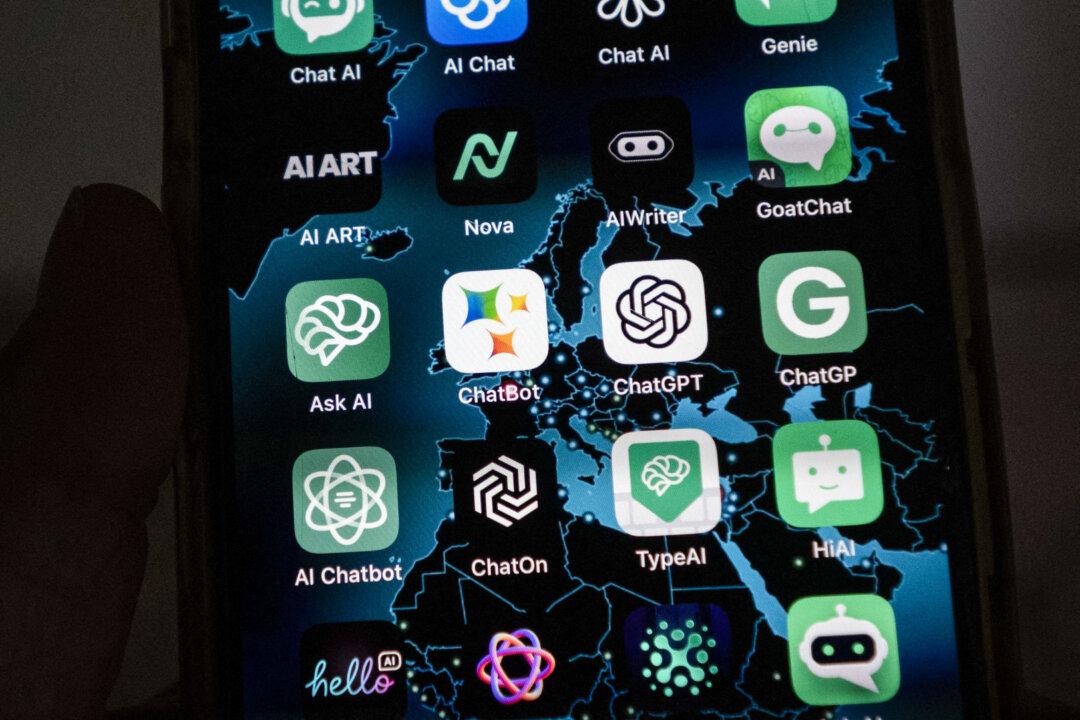

Multiple U.S. police departments issued alerts about a new iPhone feature that lets two devices held close together share contact information and images wirelessly, warning that the feature could pose a risk to children and other vulnerable individuals.

“If you have an iPhone and have done the recent iOS 17 update, they’ve installed a feature called NameDrop. This feature allows you to easily share contact information and photos to another iPhone by just holding the phones close together,” the Middletown Division of Police in Ohio said in a Nov. 26 Facebook post. “PARENTS: Don’t forget to change these settings on your child’s phone to help keep them safe as well!”